2026: Agentic AI and Compounding Inference

How 2025’s hype, H100s, and hard lessons set up AI for 2026

And in the end, 2025 mostly did what everyone thought it would, albeit with all the chaos you’d expect when hype collides with physics and economics — like opening a Coke bottle laced with Mentos. AI adoption accelerated, infrastructure spending exploded, GPU clouds flirted with public markets, and Nvidia somehow ended the year even bigger than it started.

2026 won’t be any less dramatic; the drama will just spread across an increasingly nebulous infrastructure. Yes, adoption will keep ramping (watch the enterprise), capital will keep flowing (sometimes in circles), and IPOs and M&A will keep coming in waves. But the real shift will be in how and where inference runs. Deals like Nvidia + (most of) Groq, the breakout of Google’s TPUs, and the brutal math of exploding token volumes are bursting AI out of centralized clouds and into metro and edge networks, CDNs, purpose-built platforms, on-prem environments, and devices.

But agentic AI is the real accelerant (the Mentos?) in 2026. (Meta’s $2B Manus deal was a tell.) As we move from humans prompting models to machines coordinating with machines, inference demand starts compounding, turning latency, cost, reliability, and locality into first-order constraints. That’s what will make 2026 the Year of Agentic AI, and why all this inference and edge stuff goes from “interesting optimization” to “hard requirement.”

Measuring a year in hype, H100s, and hard lessons.

525,600 minutes is, if you’re (still) obsessed with Rent (disclosure: guilty😳), how you measure a year. But how you measure how well tech and AI (and, least importantly, i.e., most importantly, my predictions) performed in 2025 really depends on whether you want to ride the year’s roller coaster or just look at where it started and where it ended. Either way, you end up in the same place.

So, first: where we started 2025.

Analysts predicted broad adoption of AI services.

Hyperscalers and others promised massive investment in AI infra.

Nvidia was on top of the AI world, with a stock price of around $140/share.

And on January 6, 2025, I published Welcome to the Year of the GPU Cloud IPO(s), predicting that CoreWeave’s IPO and imminent valuation explosion would usher in an AI-related IPO boom.

And, where we ended 2025* (NB: I’m using the first trading day of this year because nobody trades the last week of the year and the 1/2/26 closing price serves my narrative better. Thus the asterisk. Shut up.)

OpenAI weekly active users and revenue were up 2-3x to near 1B and >$12B. ✅

Hyperscalers, neoclouds and others invested ~$400B in data center and AI infra. ✅

Nvidia closed at almost $190/share on January 2, 2026 (+35% ish). ✅

CoreWeave closed at ~$80/share that same day – almost 2x its March IPO price. ✅

NBIS tripled in 2025 after resuming trading as a GPU cloud (and not Russian, swear); crypto companies like APLD and BTBT/WYFI decided they were GPU clouds; and Lambda, Crusoe, and others have bankers drooling. ✅✅✅

Oh and Nvidia closed the year with a $20B blockbuster M&A deal swallowing up Groq’s IP and its founder, the alleged brain behind Google’s TPU. 💰

Yep. Everything up and to the right. All predictions came true. Helluva year. Nothing more to see here re 2025. So now just… predictions for 2026 and check in again in a year?

Not so fast. Maybe we should zoom in a little first re a few “hiccups” from last year that made for a bumpy ride – courtesy of China, Michael Burry, and physics – to set the stage for what will definitely, maybe, probably happen in 2026. (And 50% of the time, I’m right every time.)

Zoom in to 2025.

First point: OpenAI growth. TLDR: it’s huge.

ChatGPT is at that tipping point where you’re surprised when you hear people don’t use it regularly and equally surprised that your father-in-law has been building home office desks under ChatGPT’s tutelage.

Enterprise adoption is a different story, supposedly hovering around 10% (of something). Enterprise capitulation to total AI adoption (like what happened with cloud sometime between 2014 and now) hasn’t happened yet, for some of the same reasons cloud took a while, plus a few new ones.

But I maintain that the ROI on AI implementation is (way) too high and (way) too hard for enterprises to ignore much longer and mass adoption is a matter of when not if. Case in point: JPMorgan already claims 50% AI adoption among employees. What does that mean for AI revenue now? Lord knows. But it’s better than 10% AI adoption by employees; so, there.

Next: Nvidia. While a ~35% move in a mega cap stock (with a market cap of $4-5T) is a nice year, this move required ample servings of intestinal fortitude:

😱NVDA started the year around $140/share, but tumbled after the January 20, 2025 DeepSeek paper dropped because, despite Project Stargate “debuting” the next day (“completely by coincidence”), investors decided that AI didn’t need many GPUs anymore since models would be more efficient.

📈In April, NVDA bottomed at just under $100 before investors started to realize that Nvidia was indeed continuing to sell a boatload of these chips, which was confirmed in late May with a(nother) blowout earnings report. The first half of the year was now a wash for the stock.

🧐The stock peaked at >>$200/share (and a $5T valuation) right around when Michael Burry started telling people about GPU depreciation and circular deals after which the stock drifted down to where it is now (i.e., up ~35% for last year).

And finally: CoreWeave.

😱After scaling back its March IPO (see above re DeepSeek rendering GPUs “useless”), CRWV debuted and hovered around $40 before bottoming near $35. (“Year of the GPU Cloud IPO(s)” my ass….)

📈As investors realized that this AI thing was rather important and CoreWeave snagged a few more monster contracts and reported some monster numbers, the stock climbed to >$180/share and I took a victory lap (while I could).

🧐The stock bounced between $100-150/share until they reported strong 3Q25 numbers but pushed out 4Q25 revenue as data center build-outs took longer than expected. Still, here we are somewhere in the $80/share vicinity, roughly double both the IPO price and the bottom.

2026: Another year, another layer.

We enter 2026 a bit rattled and perhaps jaded, but IMO the underlying thesis remains intact: software architecture is collapsing down to a single logic layer running on GPUs or GPU-like silicon in newly built data centers; i.e., the (>)$1T (GPU) cloud pivot I laid out here, calling for the entire world’s digital infrastructure to be rebuilt from scratch. (Sort of.)

And my new favorite big fuzzy numbers* are token consumption versus token pricing. (The asterisk means that fuzzy numbers are sorta made up, but directionally accurate – maybe, and serve my narrative – definitely.)

Simply put: AI token consumption is growing 10x (i.e., +900%) per year while token cost is falling 50% (i.e., 0.5x) per year. That aforelinked OpenRouter / a16z report shows aggregate token usage on OpenRouter has grown to >100T tokens from ~10T tokens a year earlier. The same report shows a current median effective inference cost of ~$0.73 per million tokens. Independent analyses of corporate AI spend indicate that effective costs were roughly $1-2 per million tokens in early 2024, implying a decline of roughly 25-65% over ~18-24 months (about 50% from the ~$1.50 midpoint). So let’s use 50% per year because it’s a nice round number.

What does that mean? As my new BBFF (extra B is for banker BFF) described: it’s Moore’s Law on steroids.
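The “steroids” part is easiest to see with compounding. Total token spend is volume × price, so ~10x volume at ~0.5x price per token still means ~5x more spend every year, while a fixed budget buys ~2x more tokens each year. Compare that with Moore’s Law’s classic doubling every ~two years. A minimal Python sketch using the article’s round numbers; the function names are illustrative, and the two-year doubling period is the conventional Moore’s Law rule of thumb, not a figure from the report:

```python
"""Token economics with the article's round (fuzzy) numbers; a sketch, not a forecast."""

VOLUME_GROWTH = 10.0   # token consumption multiplies ~10x per year
PRICE_MULTIPLE = 0.5   # price per token roughly halves each year


def spend_multiple(years: int) -> float:
    """Total token spend after `years`, relative to today (spend = volume x price)."""
    return (VOLUME_GROWTH * PRICE_MULTIPLE) ** years


def tokens_per_dollar_multiple(years: int) -> float:
    """How many more tokens a fixed budget buys after `years` of price halving."""
    return (1 / PRICE_MULTIPLE) ** years


def moores_law_multiple(years: int, doubling_years: float = 2.0) -> float:
    """Rule-of-thumb Moore's Law comparison: a doubling roughly every two years."""
    return 2 ** (years / doubling_years)


for years in (1, 3, 5):
    print(
        f"year {years}: spend x{spend_multiple(years):,.0f}, "
        f"tokens per $ x{tokens_per_dollar_multiple(years):,.0f}, "
        f"Moore's Law x{moores_law_multiple(years):.1f}"
    )
```

With these inputs, year 1 shows spend x5, tokens per dollar x2, and Moore’s Law x1.4; by year 5 it is spend x3,125 and tokens per dollar x32 versus Moore’s Law x5.7. Cheaper tokens don’t shrink the bill; they grow it.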

What does this all mean for 2026?

First prediction: AI adoption will continue to ramp. Duh.

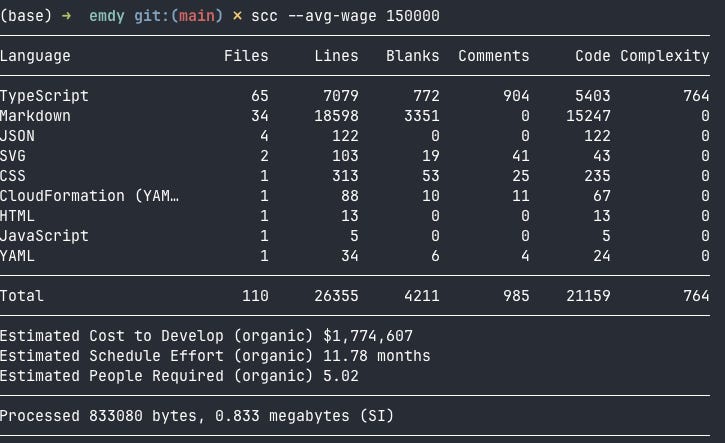

The riskier call: enterprises will hit an adoption tipping point. Why? Because the ROI is too hard to ignore. Aside from ~50% of JPMorgan employees reportedly adopting AI, software development productivity alone is experiencing orders-of-magnitude improvement. See the data below? Yeah, I have no idea what it really says either but…

…this is from a twice-exited technical founder friend who is paying Anthropic $200/month for Claude Code to do almost $2M/year worth of work… in one week. (So multiply that improvement by 52, because AI doesn’t take vacations.)
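The “multiply by 52” joke is just arithmetic on the anecdote’s numbers. A rough Python sketch, assuming the whole $200 monthly subscription is charged against that one week (conservative) and, for the extrapolation, that the same pace holds every week of the year (a big if); the ~$2M figure is the friend’s estimate, not a measurement, and the names are illustrative:

```python
"""ROI arithmetic for the Claude Code anecdote (the anecdote's numbers, not measurements)."""

MONTHLY_PRICE = 200.0       # $/month subscription
WORK_VALUE = 2_000_000.0    # ~$2M/year worth of work, per the anecdote
WEEKS_TO_DELIVER = 1        # ...delivered in one week


def roi_multiple(value: float, cost: float) -> float:
    """Dollars of work-equivalent delivered per dollar spent."""
    return value / cost


# Conservative: charge the full month's subscription to that single week of output.
one_week = roi_multiple(WORK_VALUE, MONTHLY_PRICE)

# The "multiply by 52" extrapolation: the same pace, every week, for a year.
annual_value = WORK_VALUE * 52 / WEEKS_TO_DELIVER
annual_cost = MONTHLY_PRICE * 12
full_year = roi_multiple(annual_value, annual_cost)

print(f"one week:  {one_week:,.0f}x")   # one week:  10,000x
print(f"full year: {full_year:,.0f}x")  # full year: 43,333x
```

Even if the anecdote is off by an order of magnitude (or two), the ratio stays absurd, which is the point about ROI being too hard to ignore.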

Second: data center investment will continue to grow. (Duh again.)

The wrinkle is that we should expect deployment (and revenue recognition) to (continue to) be lumpy and bumpy; i.e., the CRWV 4Q25 revenue push-out will not be the last time we see something like that. BUT, for as many times as we see deployments pushed because something (literally) explodes or the permitting office was closed because of the flu, we’ll also see wild swings on the upside as a growing pipeline gets “suddenly” deployed.

How do we trade that? Honest answer: I have no idea; I don’t trade and shouldn’t be allowed to trade. (My long-term investment performance on early sector-formation calls is better than my trading prowess, because the latter sucks.)

Third: IPOs and M&A.

Lambda supposedly hired bankers to IPO, and word is they’re raising a few hundred million dollars beforehand in a round that converts at a discount to an eventual IPO valuation. Crusoe has had similar rumors (disclosure: I have equity in Crusoe via an SPV that bought shares in the Series D; that said, the Crusoe IPO rumors came to me from unrelated parties).

Of course, if CoreWeave continues to suck wind, those IPO prospects will look a lot bleaker. But don’t fret. If 4Q25 scared the hell out of public equity investors, it didn’t have the same effect on big tech corp dev teams given the December announcements about Meta buying Manus and Nvidia (sorta) buying Groq.

Fourth: inference and edge.

This isn’t so much a prediction as it is a rehashing of my points on edge inference laid out here, here, and here. TLDR: inference is the new AI battleground where ultra-low-latency, cost-efficient execution at massive scale will be won at the edge, in CDNs, and with purpose-built platforms.

After building and training models (which, yes, we’ll still do), the industry is now focused on how models will run cheaply, reliably, and fast at scale, because inference is where AI actually touches users, products, and revenue — and where the real infrastructure economics show up.

Three signals from 2025 made this unavoidable (because there are always three).

Nvidia’s ~$20B Groq deal was many things, including a bet on inference IP, deterministic low-latency execution, and owning the next bottleneck.

Google’s TPUs finally broke containment, proving that purpose-built inference silicon plus tight software integration can drive massive volume at very low effective cost.

The math caught up with the narrative: token consumption is growing ~10x per year while effective token costs are falling ~50% per year. That combination doesn’t just enable more AI usage; it demands a different infrastructure topology.

Which brings us to the edge. Inference is already larger than training by most practical measures (revenue, token consumption, deployment footprint) and is poised to be multiples larger as usage scales. But routing trillions of inference calls through a handful of centralized regions quickly runs into the limits of physics, networking, and economics. In 2026, that pressure will push inference workloads outward into CDNs, edge networks, purpose-built inference platforms, on-prem environments, and on-device.
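The “limits of physics” part has a hard floor: light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), so distance alone sets a minimum round-trip time before any queuing, routing detours, or model compute. A minimal Python sketch; the placements and distances are illustrative assumptions, not measurements of any real network:

```python
"""Speed-of-light floor on inference round trips (illustrative distances only)."""

# Light in optical fiber travels at roughly two-thirds of its vacuum speed:
# ~200,000 km/s, i.e. ~200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber. Ignores routing detours,
    queuing, handshakes, and the inference itself, so real numbers are higher."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical one-way distances from the user to wherever the model runs.
PLACEMENTS = {
    "metro edge": 50,
    "regional cloud": 1_000,
    "cross-country": 4_000,
    "other side of the planet": 15_000,
}

for placement, km in PLACEMENTS.items():
    print(f"{placement:>24}: at least {min_rtt_ms(km):6.1f} ms per round trip")
```

At 50 km the floor is 0.5 ms per round trip; at 1,000 km it is 10 ms; at 15,000 km it is 150 ms. Real-world latency is always higher, but no amount of GPU spend moves the floor; only moving the compute closer does.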

One more thing: The Year of Agentic AI.

If 2025 was the Year of the GPU Cloud IPO(s) (was it?), then 2026 will be the Year of Agentic AI (will it?). Last year, in The Machine-Native Network and The Machine-Native Trade, I argued that we’re moving from humans prompting models to machines coordinating with machines; i.e., agents that plan, negotiate, retry, observe, and act across networks and markets with minimal human involvement. That shift is profound because agentic systems don’t just generate more tokens; they generate orders of magnitude more inference events. Every decision branches, every action triggers feedback, and every loop compounds compute, latency sensitivity, and reliability requirements.

Agentic AI turns inference from linear into recursive computing. Consequently, latency, cost, reliability, and locality all matter more as agents wait on each other, loop endlessly, and act in real time on real systems, which turns an already hard infrastructure problem into an exponentially harder one.
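Why “recursive” matters, in a toy model: each agent step spawns a few follow-up calls, so call counts grow geometrically with depth, retries inflate them further, and even with parallel siblings every level adds a full inference round trip to the wall-clock time. A minimal Python sketch; the branching factor of 3, depth of 4, 20% retry rate, and 300 ms per call are hypothetical parameters, not measurements of any real agent system:

```python
"""Toy model of agentic fan-out; every parameter below is hypothetical."""


def total_inference_calls(branching: int, depth: int) -> int:
    """Calls in a full tree: one root decision plus `branching` follow-ups per call,
    `depth` levels down, i.e. 1 + b + b^2 + ... + b^depth."""
    return sum(branching ** level for level in range(depth + 1))


def expected_attempts(retry_prob: float) -> float:
    """Expected attempts per call if each attempt independently fails with
    probability `retry_prob` and is retried until it succeeds (geometric distribution)."""
    return 1 / (1 - retry_prob)


def critical_path_ms(depth: int, per_call_ms: float) -> float:
    """Minimum wall-clock time when siblings run in parallel but each level
    must wait for its parent's result: one call per level, depth + 1 levels."""
    return (depth + 1) * per_call_ms


# Human chat: one prompt, one call. Agent: 3 sub-tasks per step, 4 levels deep.
calls = total_inference_calls(branching=3, depth=4)
print(calls)                                         # 121
print(calls * expected_attempts(retry_prob=0.2))     # ~151 attempts with 20% retries
print(critical_path_ms(depth=4, per_call_ms=300.0))  # 1500.0 (ms), ignoring retries
```

One human prompt is one call; this modest agent tree is 121 calls (~151 attempts with retries) and at least 1.5 seconds of sequential waiting, before any of those calls pay a network round trip. Add one more level and the call count roughly triples.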

The final accelerant, From the Porch.

So yes, inference and the edge are the battleground, but agentic AI is the accelerant. In 2026, the entire machine-native economy will demand inference that is faster, cheaper, more deterministic, and closer to where decisions are made. That’s what turns (edge) inference from an optimization into a requirement — and what makes 2026 the year this all stops being theoretical. Agentic AI is where everything stops adding up and starts compounding.